Understand In-Context Learning and Prompt Engineering for LLMs


Hey everyone, today we want to have a look at another aspect of interacting with language models, which can be particularly helpful in making the most out of these advanced tools. This concept, known as in-context learning, is especially useful when engaging in prompt engineering.

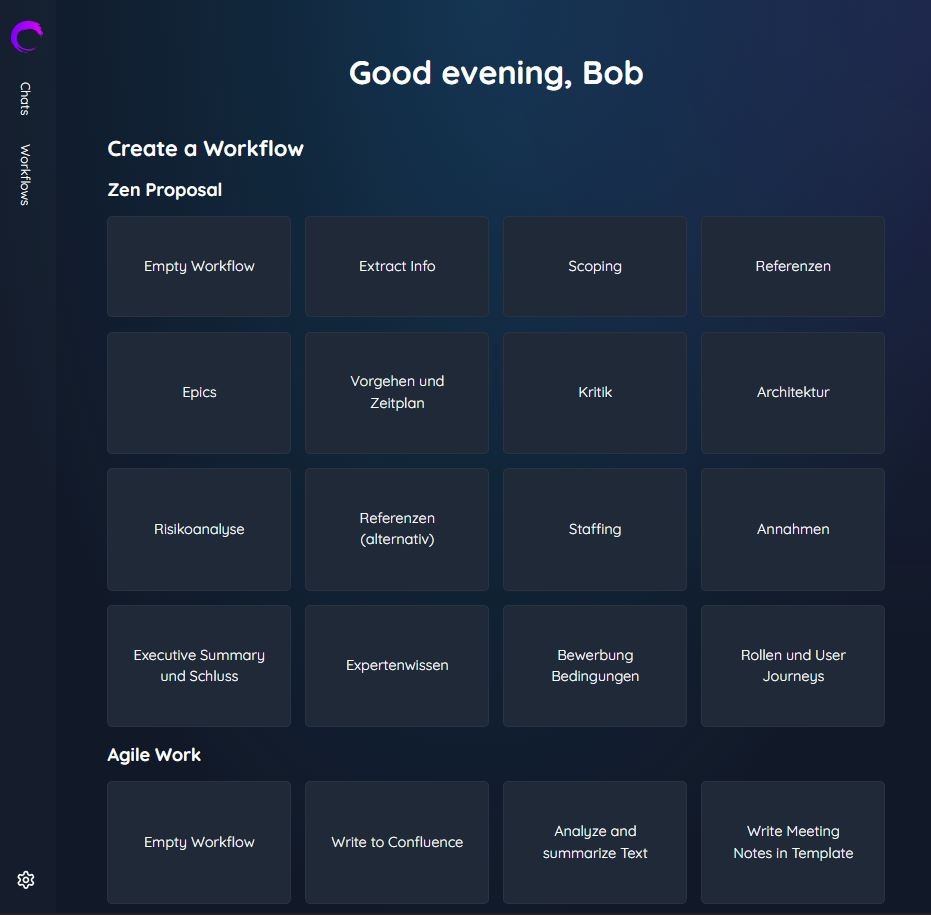

First, what is prompt engineering? Large language models have become increasingly accessible over the last year, especially in no-code AI workflow automation and AI app builder platforms. The quality of their output relies heavily on the structured instructions that we provide. The way we prompt the model, the words we use, the structure we apply, and the specific details we provide all significantly influence the output we can expect. To achieve the best possible results, it's crucial to refine the input we provide—a technique known as prompt engineering.

The prompt is essentially what we feed into the model: the instructions we give. By engineering this prompt to be more effective, we can obtain the best results for the specific task at hand. In this blog post, we'll explore what a prompt is and which components it consists of. We'll also discuss how to use a lesser-known technique called in-context learning to improve the model's output further, which is particularly valuable in AI for business applications.

What is Prompt Engineering?

Prompt engineering is an integral technique in working with language models, especially in no-code AI workflow automation and AI app builder platforms. Technological advances have made these models accessible to the general public, because they are operated through natural language rather than code. If you can speak and write English or any other natural language, you can interact with a language model: you give it instructions in plain language and receive outputs based on the model's understanding and processing capabilities.

However, it's important to note that the quality of the output provided by the language model is highly dependent on the structured instructions we give. This means that the way we prompt the model, the language we use, the structure of our inquiry, and the level of detail we include are all crucial. The better the input, the better the output.

Prompt engineering involves crafting and refining the prompt or the input we provide to the model to achieve the highest quality results for the specific task we aim to accomplish. The prompt is essentially a set of instructions given to the model. By carefully engineering these prompts, we can guide the model more effectively, ensuring that the output aligns closely with our expectations and requirements.

In essence, prompt engineering is about improving the input to get the best possible output. It requires understanding how language models interpret and process the given instructions and then using that understanding to craft well-defined and precise prompts. This methodology is vital for various applications in AI for business, from simple text generation to more complex tasks like summarization, translation, and beyond. In enterprise AI settings, mastering prompt engineering can significantly enhance automated processes and decision-making.

Components of a Prompt

To fully understand prompt engineering, we need to break down the components of a prompt. In no-code AI workflow automation and enterprise AI solutions alike, a prompt is the context we provide to the model, and it is made up of three elements:

Input Data: This is the raw data or text that needs to be processed by the language model. It could be a sentence that needs to be translated, a document requiring summarization, or a text needing categorization. The input data is the main subject matter for the model to work on. In an AI app builder, this is foundational for tailoring specific functions.

Examples: Providing examples can significantly help the model understand the task it is supposed to perform. Although language models are trained on massive datasets and have built-in knowledge from their training data, task-specific examples can refine their performance further. For instance, in a translation task, providing examples of translations helps the model deduce how to translate new, unseen data points more effectively. This is particularly useful in AI for business applications where precision is critical.

Instructions: Instructions encode the task you want the model to perform. Clear and precise instructions are essential so the model understands exactly what you expect from it. These should be detailed enough to guide the model without ambiguity, ensuring that the output aligns with your requirements.

Combining these elements effectively forms the context for the language model. By carefully selecting and structuring the input data, providing relevant examples, and crafting clear instructions, you can optimize the prompt to achieve the most accurate and high-quality output from the model. This approach is vital for leveraging AI in business and enterprise AI settings, where effective automation can lead to significant efficiencies.
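To make the three components concrete, here is a minimal Python sketch that assembles them into a single prompt string. The `Prompt` class, the section labels, and the ordering (instructions, then examples, then input) are illustrative assumptions, not a format any particular model requires; other orderings can work just as well.

```python
from dataclasses import dataclass, field


@dataclass
class Prompt:
    """Hypothetical container for the three prompt components: instructions, examples, input data."""
    instructions: str
    input_data: str
    examples: list[str] = field(default_factory=list)

    def render(self) -> str:
        # Instructions first, so the task is stated before the data (one possible ordering).
        parts = [f"Instructions:\n{self.instructions}"]
        # Each example gets its own labeled section.
        for i, example in enumerate(self.examples, start=1):
            parts.append(f"Example {i}:\n{example}")
        # The raw input data the model should work on comes last.
        parts.append(f"Input:\n{self.input_data}")
        return "\n\n".join(parts)


prompt = Prompt(
    instructions="Translate the input from English to Spanish.",
    input_data="Where is the train station?",
    examples=["English: Good morning.\nSpanish: Buenos días."],
)
print(prompt.render())
```

Keeping the components as separate fields makes it easy to swap the input data while reusing the same instructions and examples.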

A Concrete Example: Creating a LinkedIn Post from a Blog Post

To illustrate the concepts of prompt engineering and in-context learning, let's dive into a practical example. Imagine you have written a well-crafted blog post covering a specific topic, and now you want to create a LinkedIn post based on this blog post. If you simply paste your blog content into a language model like ChatGPT, you might find that the generated LinkedIn post doesn’t entirely reflect your personal style. It may sound unfamiliar, overly elaborate, or exaggerated, and not quite match the tone you intended.

To achieve a result that better aligns with your style, you can use in-context learning combined with examples to refine the output. Let's see how this can be done:

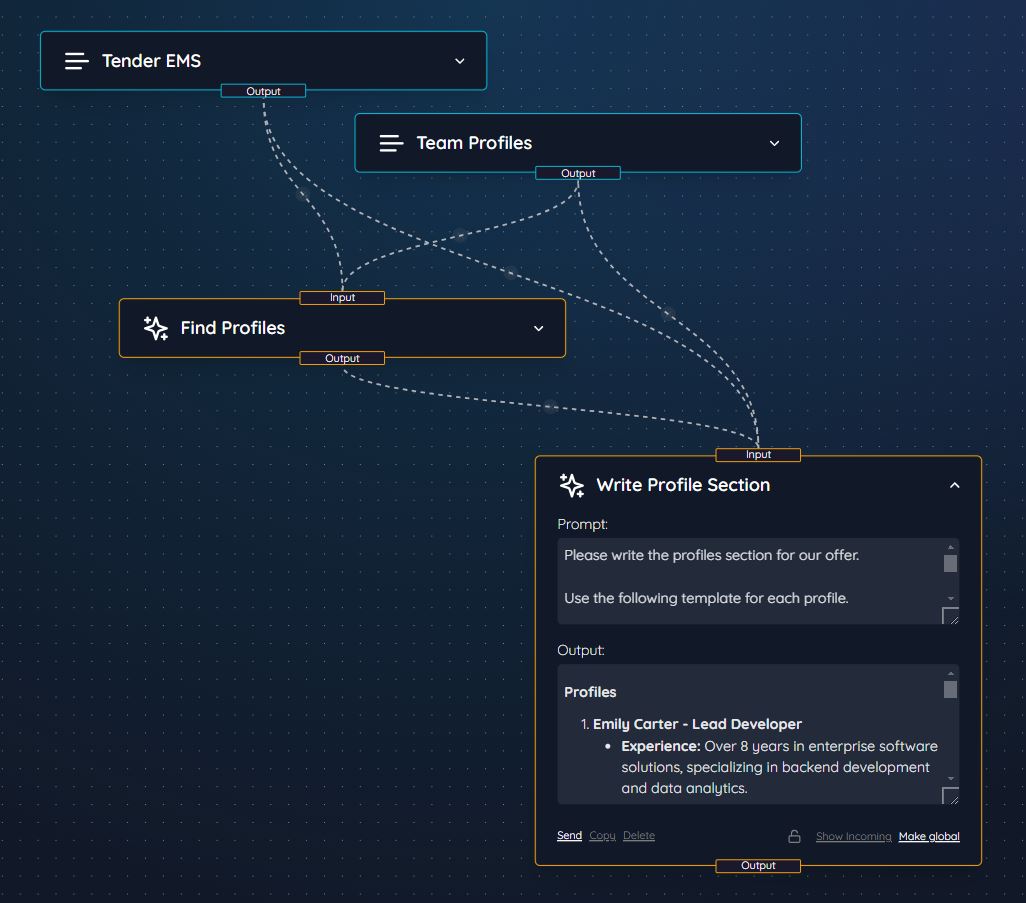

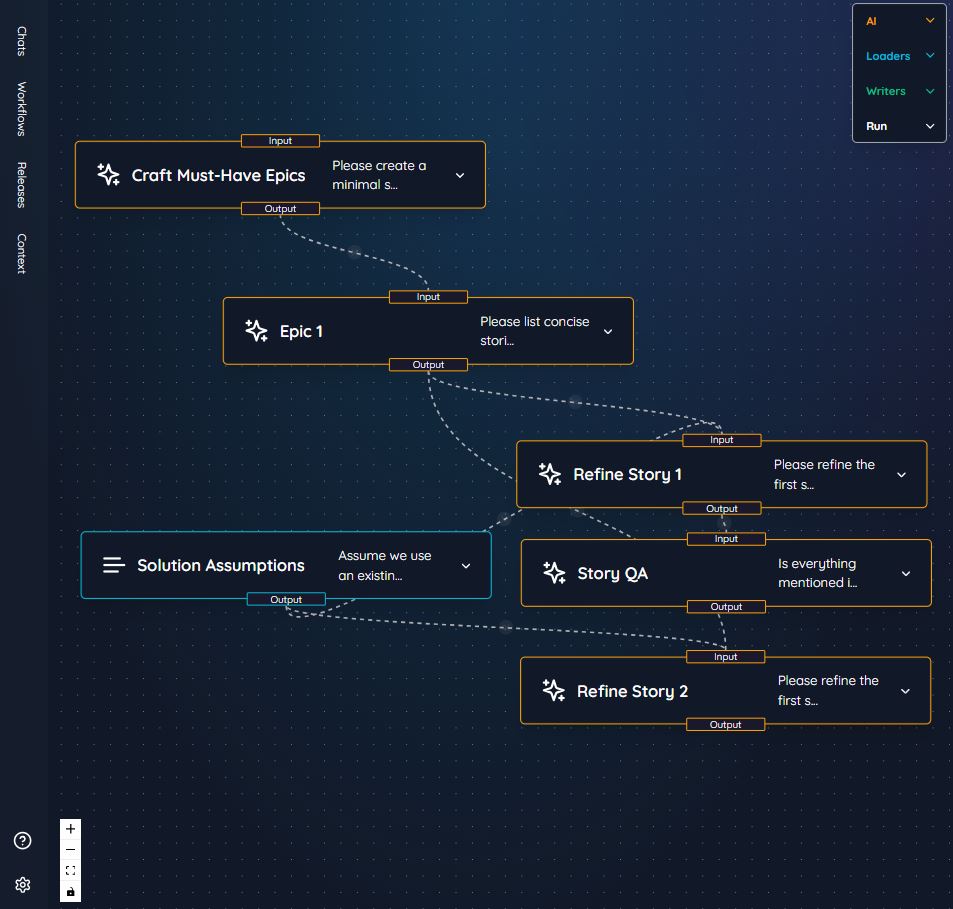

Using the Canvas Board: Tools like the Canvas Board of Zen AI allow you to explicitly define the context for the language model. For instance, you can create notes for your blog post and separate notes for instructions. By linking these notes, you can better structure the prompt.

Defining the Blog Post Context: In one note, you can place the entire text of your blog post. This forms the raw input data that the language model will use to generate the LinkedIn post.

Crafting the Instructions: In another note, you provide clear instructions. For example, your instruction note might read, “Please create a LinkedIn post based on the given blog.” By linking the blog post note to the instruction note, you ensure that the model considers the blog post text when generating the LinkedIn content.

Adding Examples: For a more personalized touch, you can provide the model with examples of past LinkedIn posts you've written. These examples help the model understand your style and tone. You can link these example notes to the prompt structure in the Canvas Board, clearly indicating to the model to draw from these stylistic cues.

By combining your blog post, specific instructions, and examples of your preferred writing style, you provide the language model with a comprehensive context. The context now includes the blog post as input data, examples that illustrate your desired style, and precise instructions for the task at hand.
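In a chat-based API, the same three ingredients can be passed as a list of messages. The sketch below assumes the common `role`/`content` message shape used by many chat-completion APIs; the function name, the split between a system and a user message, and the wording are hypothetical choices for illustration, and the actual model call is left out.

```python
def build_linkedin_messages(blog_post: str, example_posts: list[str]) -> list[dict[str, str]]:
    """Assemble style examples, instruction, and blog post as chat messages (illustrative layout)."""
    # Style examples go into the system message so they frame the whole exchange.
    examples_text = "\n\n---\n\n".join(
        f"Example post {i}:\n{post}" for i, post in enumerate(example_posts, start=1)
    )
    system = (
        "You write LinkedIn posts in the author's personal style.\n"
        "Here are posts the author has written before:\n\n" + examples_text
    )
    # The user message carries the instruction plus the blog post as input data.
    user = (
        "Please create a LinkedIn post based on the given blog. "
        "Please use the given examples to adapt the general style for my LinkedIn post.\n\n"
        f"Blog post:\n{blog_post}"
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]


messages = build_linkedin_messages(
    blog_post="(full text of your blog post)",
    example_posts=["(a LinkedIn post you wrote earlier)", "(another one)"],
)
```

Putting the examples in the system message is one design choice; placing them in the user message alongside the blog post is an equally reasonable alternative.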

Using this approach, when you prompt the model, it will generate a LinkedIn post that is more aligned with your personal writing style and preferences. This example showcases how in-context learning and prompt engineering can significantly enhance the quality and relevance of the output from a language model.

Enhancing Output with In-Context Learning

Now that we've outlined the basic components of prompt engineering, let's delve deeper into the concept of in-context learning. In-context learning is a technique where the model adapts to the context you provide, including examples relevant to the task at hand, without any change to its underlying weights. This method helps tune the model's output to better match specific requirements.

In the earlier example, we saw how providing examples of LinkedIn posts you previously created helps the model generate text that aligns with your writing style. This principle can be extended to various other tasks to enhance the quality of the results.

For instance, consider a translation task. Suppose you are tasked with translating a document from English to Spanish. By providing the language model with several examples of translated sentences or paragraphs, the model can better understand the nuances required for accurate translations. These examples guide the model on how to approach new, unseen data points, resulting in more precise translations.
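A few-shot translation prompt can be as simple as a list of example pairs in a fixed pattern, with the last entry left open for the model to complete. This minimal sketch assumes a plain-text completion style; the function name and the `English:`/`Spanish:` labels are illustrative choices, not a required format.

```python
def few_shot_translation_prompt(pairs: list[tuple[str, str]], new_sentence: str) -> str:
    """Format English->Spanish example pairs followed by an open slot for the new sentence."""
    lines = ["Translate English to Spanish."]
    for english, spanish in pairs:
        lines.append(f"English: {english}\nSpanish: {spanish}")
    # The final entry repeats the pattern but leaves the Spanish side empty,
    # so the natural continuation is the translation itself.
    lines.append(f"English: {new_sentence}\nSpanish:")
    return "\n\n".join(lines)


pairs = [
    ("Good morning.", "Buenos días."),
    ("Thank you very much.", "Muchas gracias."),
]
print(few_shot_translation_prompt(pairs, "Where is the library?"))
```

The consistent pattern is what carries the signal here: the model infers the task from the repeated structure as much as from the one-line instruction.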

Here’s how you can leverage in-context learning to its fullest:

Provide Relevant Examples: Examples specific to the task greatly enhance the model’s performance. For a summarization task, provide examples of how you’ve summarized texts previously. The model will pick up on the structure, tone, and style you prefer in summaries.

Task-Specific Instructions: Alongside examples, clear and concise instructions are crucial. For instance, in a summarization task, you might instruct the model to “summarize the given article in 100 words, maintaining the key points and neutral tone.” This level of specificity helps the model understand both the format and the content expectations.

Iterative Feedback: Another way to improve output is through iterative feedback. Initially, the model might not perfectly capture your style or requirements. By iteratively modifying the prompt—tweaking instructions, refining examples, and providing feedback on the generated output—you guide the model towards better performance.

Varying Your Examples: Using examples that reflect variations in style and complexity gives the model a richer context. In general, the more representative the examples in your prompt, the better the model can infer what you expect. Note that this is not fine-tuning: the model's weights do not change, so the effect lasts only as long as the examples remain part of the prompt.
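The summarization instruction and the iterative-feedback idea can be combined into a small loop. In this sketch, `generate` is a hypothetical placeholder for whatever model call you use, the 100-word target is treated as an upper bound (an assumption), and the feedback is a simple word-count check; real feedback would usually also address style and content.

```python
from typing import Callable


def summarize_with_feedback(
    article: str,
    generate: Callable[[str], str],
    max_words: int = 100,
    max_rounds: int = 3,
) -> str:
    """Re-prompt until the summary fits the word limit or the rounds run out.

    `generate` is a hypothetical stand-in for a real model call.
    """
    instruction = (
        f"Summarize the given article in {max_words} words, "
        "maintaining the key points and neutral tone."
    )
    prompt = f"{instruction}\n\nArticle:\n{article}"
    summary = generate(prompt)
    for _ in range(max_rounds - 1):
        word_count = len(summary.split())
        if word_count <= max_words:
            break  # Constraint met, no further feedback needed.
        # Feed the previous attempt back with concrete, checkable feedback.
        prompt += (
            f"\n\nPrevious summary:\n{summary}\n\n"
            f"Feedback: that summary has {word_count} words; "
            f"shorten it to at most {max_words} words."
        )
        summary = generate(prompt)
    return summary
```

To try it without a model, pass any function that takes a prompt string and returns text; in practice you would wrap your model client in such a function.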

In-context learning essentially enables the model to learn from the specific context you provide, enhancing its ability to produce output that closely matches your expected standard. It’s a dynamic way of interacting with language models, allowing for adjustments and refinements that lead to better overall performance.

Visualizing Context with the Canvas Board

One of the challenges in prompt engineering and in-context learning is effectively managing and visualizing the context provided to the language model. This is where tools like the Canvas Board of Zen AI come into play, offering a structured and visual approach to define and manage context.

Using the Canvas Board, you can make the context explicit by visually mapping out the various components of your prompt. Here’s how you can utilize the Canvas Board effectively:

Nodes as Contextual Elements: In the Canvas Board, each piece of context—whether it's the input data, examples, or instructions—can be represented as a node. For instance, you can create a node for your entire blog post, another for specific examples of LinkedIn posts, and a third for your instructions.

Creating Edges to Define Relationships: By drawing edges (links) between these nodes, you clearly define the relationships and dependencies between different elements. For example, you can link the blog post node to the instruction node, ensuring that the model considers the blog text when crafting the LinkedIn post.

Layering Context: You can layer various contexts by adding or removing nodes and edges, depending on what you want the model to focus on. This flexibility allows you to experiment with different configurations to see which yields the best output.

Instructions Tailored to Context: To make the instructions more effective, you can explicitly reference the nodes in your prompt. For example, you might expand your prompt to, “Please use the given examples to adapt the general style for my LinkedIn post.” This ensures the model uses the examples to inform the style and tone of the output.

Iterative Adjustments: The Canvas Board allows for easy iterative adjustments. If the initial output isn’t quite right, you can tweak the nodes and edges, refine your examples and instructions, and prompt the model again. This iterative process gradually hones the model’s performance.
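To show how notes and links can turn into a prompt, here is a toy model of a canvas in Python. It is an assumption for illustration only and does not reflect how the Zen AI Canvas Board is actually implemented: notes are stored by name, a link from one note to another means the source note is passed as context to the target, and building the prompt collects the directly linked notes before the instruction.

```python
from collections import defaultdict


class Canvas:
    """Toy canvas: notes are nodes, links are directed edges (hypothetical design)."""

    def __init__(self) -> None:
        self.notes: dict[str, str] = {}
        self.links: dict[str, list[str]] = defaultdict(list)

    def add_note(self, name: str, text: str) -> None:
        self.notes[name] = text

    def link(self, source: str, target: str) -> None:
        # An edge source -> target means "target sees source as context".
        self.links[target].append(source)

    def unlink(self, source: str, target: str) -> None:
        self.links[target].remove(source)

    def build_prompt(self, instruction_note: str) -> str:
        # Collect every note linked directly into the instruction note, in link order,
        # then finish with the instruction text itself.
        sections = [f"[{name}]\n{self.notes[name]}" for name in self.links[instruction_note]]
        sections.append(self.notes[instruction_note])
        return "\n\n".join(sections)


canvas = Canvas()
canvas.add_note("blog_post", "(full text of the blog post)")
canvas.add_note("example_post_1", "(a LinkedIn post written earlier)")
canvas.add_note(
    "instruction",
    "Please create a LinkedIn post based on the given blog. "
    "Please use the given examples to adapt the general style for my LinkedIn post.",
)
canvas.link("blog_post", "instruction")
canvas.link("example_post_1", "instruction")
print(canvas.build_prompt("instruction"))
```

Removing a link with `unlink` and rebuilding the prompt is the code equivalent of layering context on the board: you can compare outputs with and without a given example. The sketch only follows direct links; a fuller version might walk the graph transitively.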

By visualizing the context, you make it straightforward to manage and refine the inputs provided to the language model. This is particularly useful in complex tasks where multiple layers of context need to be considered. The Canvas Board's visual interface makes it easier to check that nothing is overlooked and provides a clear overview of the entire prompt engineering process.

In summary, visualizing context with tools like the Canvas Board can greatly enhance your interaction with language models. It allows you to manage and refine the inputs systematically, leading to more precise and tailored outputs. This visual approach is especially advantageous over traditional chat interfaces, where the context might get lost in extensive chat histories.

Summary and Final Thoughts

To summarize, effectively interacting with language models through prompt engineering and in-context learning can significantly enhance the quality of the output. It requires a thoughtful approach to crafting prompts and providing context, ensuring that the model understands the task at hand and the expectations for the output.

Key Takeaways:

Prompt Engineering: This involves refining the instructions and input data provided to the model. The quality of the output heavily relies on the clarity and structure of the prompt. By carefully crafting and structuring the prompt, you can guide the model to produce more accurate and relevant results.

Components of a Prompt: The prompt consists of input data, examples, and instructions. Each component plays a crucial role in defining the context for the language model. Providing task-specific examples and clear instructions is essential for the model to understand and perform the desired task.

In-Context Learning: This technique involves providing the model with relevant examples and additional context to improve its performance on specific tasks. Examples help the model understand the nuances and expectations, leading to higher-quality output.

Using Tools like the Canvas Board: Visual tools such as the Canvas Board of Zen AI allow for a more structured and explicit definition of context. By mapping out the prompt components visually, it becomes easier to manage and refine the context provided to the model. This visual approach helps ensure that all relevant elements are considered and allows for iterative adjustments to improve output quality.

Practical Application: In our example of creating a LinkedIn post from a blog post, we saw how providing the blog content, combining it with clear instructions, and adding examples of your preferred writing style can significantly improve the generated LinkedIn post. The model learns from the context and produces an output that aligns more closely with your expectations.

In conclusion, mastering prompt engineering and in-context learning is key to leveraging the full potential of language models for no-code AI workflow automation and enterprise AI applications. By understanding these concepts and applying them thoughtfully, you can achieve the best possible results for a wide range of tasks. Whether you're translating text, summarizing documents, or generating personalized content for business purposes, these techniques enhance performance and accuracy.

If you would like to learn more about these concepts or how to use AI app builder tools like Zen AI for your specific tasks, feel free to reach out and connect with us. We're here to help you make the most of these advanced technologies for all your AI for business needs.